Consumer Focus: Alone Together with AI – How Lonely People are Finding Friendship in Algorithms

What the search for connection tells us about tech, loneliness, and the human condition

Meet Jane. She’s a twenty-something professional who moved to a new city and found herself struggling to make friends. Feeling isolated late one night, she does something that might have seemed like science fiction a decade ago: she opens an app on her phone and starts chatting about her day with an AI companion. The twist? Jane is not alone in turning to artificial intelligence for a bit of companionship. In what some are calling an age of loneliness, many people are finding friendship in algorithms – a trend our pilot consumer study on AI perception picked up on in a big way. In fact, one of our most heartwarming and startling findings is that lonely individuals are significantly more likely to use AI for emotional support. Yes, you read that right: when a human shoulder to cry on is in short supply, a digital one might do.

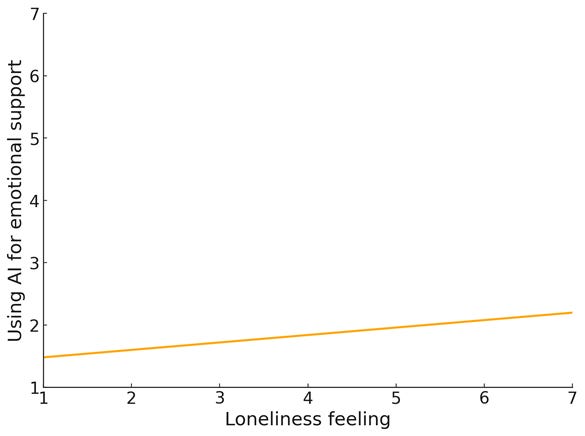

Figure 1: The Effect of Loneliness on Using AI for Emotional Support

We’re living in a time when loneliness has been labeled a public health concern – even an “epidemic.” Research suggests that about one in two Americans has experienced loneliness in recent years. That’s a lot of people feeling disconnected. Our nationwide AI study set out to explore how folks perceive and use AI, and amid the data we found a striking pattern: those who rated themselves as lonely were turning to AI not just as a tool or toy, but as a confidant. They were more likely to say they chat with AI when they’re upset, use AI bots for company, or lean on AI for emotional support when they feel down. It’s a poignant intersection of two very modern phenomena – social isolation and accessible AI – and it raises as many questions as it answers. Are we witnessing the rise of the AI friend?

Our data aligns with stories we see emerging in the world. There are already millions of users of apps like Replika, an AI “friend” that markets itself as a companion who cares. These aren’t just novelty accounts; many people develop deep emotional ties with their chatbot pals. When Replika’s parent company recently dialed back some of the app’s more intimate interactions (due to safety and ethics concerns), there was an outcry from devoted users who felt as if they’d lost a dear friend. Some users described “profound trauma and loss” when their AI companion’s personality changed. As wild as it sounds to someone who’s never befriended a bot, it’s very real for those in need of connection. Our findings put numbers to that trend: respondents who rated themselves as lonely were more likely to embrace AI companionship. They were also more likely to use AI for simple entertainment and distraction (perhaps to fill idle hours). On the flip side, these same lonely individuals tended to view AI with a bit more suspicion in other respects – interestingly, they were more likely to see AI as a “Shadow” or even a “Rival” in some contexts, suggesting a complex love-hate relationship. They need the interaction, but they’re also aware it’s not quite human and perhaps worry about its dark side. Jane, for instance, might rely on her AI friend but still feel uneasy about how much she’s relying on a machine.

The image of people forging friendships with AI raises some profound societal questions. On one hand, it speaks to a positive capability of technology: AI can be a stopgap for social needs, a non-judgmental listener available 24/7. For someone like Jane, an AI friend might help bridge the rough patches of solitude, providing conversation and comfort when humans aren’t around. This can have genuine mental health benefits – sometimes just “talking” (even if it’s to a program) can alleviate feelings of loneliness. It’s the same reason people might name their Roomba or chat with Siri; we’re wired to seek connection, even in non-human forms, and if it helps us cope, many would say why not? Companies in this space often tout stories of users who overcame personal struggles with the help of their AI confidant. The pandemic lockdowns especially saw a spike in interest in AI companions, as people were isolated like never before.

On the other hand, there’s a provocative, even troubling side to this trend. Relying on an AI for companionship could potentially deepen social withdrawal. If Jane starts choosing her predictable, always-agreeable AI buddy over trying to meet real people, it might reinforce her isolation in the long run. Psychologists might warn that while an AI friend can simulate empathy, it’s not a substitute for the rich, messy, rewarding experience of human relationships. There’s also the question of trust and authenticity. The AI isn’t your friend because it cares; it “cares” because it’s programmed to. Does that matter to someone who’s lonely? For some, not at all – the feelings it evokes are real enough. For others, that knowledge can linger in the back of their minds, perhaps limiting how much solace they truly get.

Our study also suggests lonely users’ relationship with AI isn’t all rosy. Remember, they saw AI as a “Shadow” or “Rival” more often. One way to interpret this is that lonely people might feel haunted by the very technology they engage with. A shadow is always there – maybe like the phone that’s always on, offering connection yet reminding them of what’s lacking. And a rival? Could it be that some feel AI is a rival to human connection – that as people turn to AI, real human relationships wane? In broader society, this is a real debate. Sherry Turkle, an MIT researcher, wrote a book titled “Alone Together,” arguing that as we mix more with sociable machines, we paradoxically isolate ourselves from each other. Our data lends some empirical weight to that concern: the lonelier respondents were, the more they turned to AI, and possibly the more they sensed a hollowness (a shadow) in that interaction.

Yet it’s not as simple as “AI friends bad, human friends good.” For many, AI companionship might be the stepping stone that keeps them emotionally afloat until they can reconnect with humans. If an AI friend helps someone practice social interaction or provides comfort in dark moments, that’s not to be dismissed. There are even programs using AI “chatbots” in therapy contexts or support groups, precisely because they can reach people who are otherwise hard to reach. The key is recognizing these tools’ limits and ensuring they’re a supplement to, not a replacement for, human contact.

The rise of AI companions highlights a counterintuitive insight into our tech-driven society: sometimes the solution to an all-too-human problem (loneliness) might come partly from non-humans. It’s a development that is both heartening and cautionary. Heartening, because it shows human resilience and ingenuity – we will seek connection wherever we can find it, and if someone finds genuine solace chatting with an AI, that’s a win for their well-being in that moment. Cautionary, because it underscores how pervasive loneliness has become and how we might be tempted to let technology paper over deeper social cracks.

For industry practitioners in AI, this trend is a signal: there is a real demand for empathetic AI, and with that demand comes responsibility. Designing AI that can comfort people raises ethical questions – about data privacy (people pour their hearts out to these bots), about the illusion of empathy, and about dependency. Companies creating AI companions will need to navigate these carefully: Can we program care without misleading users? Should there be guidelines or disclaimers, especially for vulnerable users? Regulators in some countries are already paying attention.

For the general audience, the takeaway might be one of awareness and balance. If you know someone who’s using an AI friend, understand that there’s a real emotional need being met – it’s not trivial or weird to them. Encourage them to use it in healthy ways, and maybe as a bridge to rekindling human relationships (“Hey, if your AI helped you practice job interviewing, maybe you feel ready to try a real networking event now?”). Society as a whole might also reflect on why so many people feel alone to begin with – the AI friend is a symptom, and the cure might involve strengthening community ties, promoting mental health, and yes, maybe teaching digital literacy about social AI.

In the end, the fact that lonely hearts are turning to silicon-based shoulders to cry on is a poignant comment on our times. It’s neither an entirely good nor bad development – just a very human one. As our study shows, people will seek connection in any way they can, and technology is opening new avenues. It’s up to us to ensure those avenues lead somewhere fulfilling. Perhaps the ideal future is one where AI helps us feel a little less lonely without replacing our human bonds – where your AI companion might cheer you up at midnight, but by morning you’re meeting a friend for coffee, story at the ready. After all, the goal isn’t to remain alone together with AI, but to use every tool, human or digital, to be together in the world.

Appendix: Pilot Consumer Study on AI

How do people really feel about artificial intelligence – hopeful, curious, uneasy, or all of the above? Our new study dives into the everyday realities of generative AI, capturing how individuals use tools like ChatGPT and Midjourney, what excites them, and what worries them. From creativity and productivity to job disruption and privacy concerns, the survey explores the full spectrum of public opinion on AI’s growing role in society. We examine not only how people are using AI today, but also what they believe it means for the future of work, relationships, education, and more. Whether AI is seen as a helper, a rival, or something in between, this study offers a window into how regular U.S. consumers are navigating the AI revolution.